Entopy is delighted to be attending Mobile World Congress (MWC) in Barcelona as part of a delegation from the Department for Business and Trade. We are privileged to be attending with our friends from the New Anglia Local Enterprise Partnership and Invest Norfolk and Suffolk, alongside some fantastic, innovative UK businesses.

MWC is a huge event, with over 80,000 people attending from across the globe. It is a chance for the technology industry to come together, learn about the latest innovations and technology trends, and network with like-minded people.

Entopy will have a presence on the UK DBT stand in Hall 7 (stand 7B41), where we will be showcasing our leading AI-enabled Digital Twin platform and novel AI micromodel technology. We will be in attendance from 26 to 28 February.

We are excited to explore, learn and meet new people. For our friends in Europe, this is a great opportunity to connect in person. If you are visiting, we encourage you to reach out to us before the event to schedule a meeting.

This blog introduces our micromodel technology, gives a snapshot update on its progress and outlines our future roadmap. The technology is designed to address the challenges of applying Artificial Intelligence in real-world, dynamic operational contexts, supporting the capture and delivery of effective decision intelligence.

What are micromodels?

The concept is pretty simple. We look at a large, complex problem and break it up into smaller, more specific chunks, deploying targeted AI models to specific parts of the problem. We then network multiple models together with other data and models, creating a dynamic network of micromodels.
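To make the idea concrete, here is a minimal sketch of a network of micromodels. It assumes each micromodel is a tiny linear model responsible for one road segment, and that an overarching step combines their outputs using an assumed share of each segment's traffic that reaches the destination. The class names, the linear models and the weighted-sum combination are all illustrative assumptions, not Entopy's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Micromodel:
    """A small model targeted at one part of the problem (here, one road segment)."""
    segment_id: str
    weights: List[float]  # hypothetical linear coefficients, one per input feature
    bias: float = 0.0

    def predict(self, features: List[float]) -> float:
        if len(features) != len(self.weights):
            raise ValueError(f"{self.segment_id}: expected {len(self.weights)} features")
        return self.bias + sum(w * x for w, x in zip(self.weights, features))


@dataclass
class MicromodelNetwork:
    """Runs independent micromodels and combines their outputs into one prediction."""
    models: Dict[str, Micromodel] = field(default_factory=dict)
    # Assumed fraction of each segment's flow that reaches the destination.
    shares: Dict[str, float] = field(default_factory=dict)

    def add(self, model: Micromodel, share: float) -> None:
        self.models[model.segment_id] = model
        self.shares[model.segment_id] = share

    def predict(self, inputs: Dict[str, List[float]]) -> Dict[str, float]:
        # Each micromodel sees only the inputs for its own segment.
        outputs = {sid: m.predict(inputs[sid]) for sid, m in self.models.items()}
        # An overarching step combines the independent outputs.
        outputs["destination_total"] = sum(
            self.shares[sid] * outputs[sid] for sid in self.models
        )
        return outputs
```

Extending the problem then means calling `add` with one more small model rather than retraining a single large one, which is the property the "atomic models for atomic problems" framing relies on.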

The technology addresses a few key challenges in the deployment of AI in complex, dynamic and multistakeholder environments.

Initial use case.

The initial iteration of our micromodel technology has naturally been use-case-led, delivering dynamic intelligence on future traffic flows to a major UK port; we will describe this in more detail in a later blog and case study. At a high level, it required micromodels to be deployed as part of a Digital Twin across the port’s strategic road network.

This required us to develop AI models capable of predicting traffic flows on specific sections of road, and to combine them, through an overarching orchestration model, with data from third-party models, such as weather forecasts and network models, and with real-time event-based data, such as traffic accidents and scheduled road maintenance.
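A minimal sketch of how an orchestration step might adjust per-segment predictions using third-party and event data. It assumes a single multiplicative weather factor and a 0 to 1 severity score per event; the `RoadEvent` type, the event categories and the multiplicative rule are hypothetical simplifications. A real orchestration model would more likely learn these effects than apply fixed multipliers.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RoadEvent:
    kind: str          # e.g. "accident" or "roadworks" (hypothetical categories)
    segment_id: str    # the road segment the event affects
    severity: float    # assumed scale: 0.0 = no impact, 1.0 = segment closed


def orchestrate(
    segment_flows: Dict[str, float],
    weather_factor: float,
    events: List[RoadEvent],
) -> Dict[str, float]:
    """Adjust micromodel flow predictions using third-party and real-time event data."""
    if weather_factor < 0:
        raise ValueError("weather_factor must be non-negative")
    adjusted: Dict[str, float] = {}
    for segment_id, flow in segment_flows.items():
        # Third-party input, e.g. a factor derived from a weather forecast.
        flow *= weather_factor
        for event in events:
            if event.segment_id == segment_id:
                # Each event on this segment reduces its expected throughput;
                # severity is clamped to [0, 1] so flow never goes negative.
                flow *= 1.0 - min(max(event.severity, 0.0), 1.0)
        adjusted[segment_id] = flow
    return adjusted
```

For example, `orchestrate({"s1": 200.0, "s2": 100.0}, 0.9, [RoadEvent("roadworks", "s2", 0.5)])` leaves `s1` reduced only by the weather factor and halves `s2` on top of that.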

This was a perfect initial use case for the technology. Road networks are inherently dynamic, with many variables and regular ‘black swan’ events. Many stakeholders are involved, and there is a need for iterative deployment (particularly when extending an initial twin over time). Within the context of the use case, which supports operators in making more data-driven decisions about traffic management protocols, there is also a high need for explainable outputs to build confidence in the intelligence and, ultimately, drive action.

Although use-case-led, the initial iteration of Entopy’s micromodel technology has wide applicability. Breaking the overall problem into smaller, more specific chunks creates ‘atomic models for atomic problems’. For example, the micromodels deployed for this use case can easily support use cases for other sites and organisations, such as airports, councils, shopping centres and stadiums, where predictive intelligence regarding traffic movement is needed.

Over the past months, we have validated the technology, proved key aspects of it and achieved many technical milestones. We have also automated core parts of the technology to ensure high repeatability and transferability to other use cases, and fleshed out our future roadmap.

Model performance.

The performance of AI models within the use case has been exceptional. By breaking the problem into smaller chunks, and so increasing the resolution on specific parts of the problem, we have been able to achieve a very good model/data fit very quickly.

The models are purposely small: the initial traffic flow model comprises only 26 features. Multiple instances of these models are deployed, operating independently and networked together. This not only supports dynamic intelligence but also acts as a good check and balance across the network.
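One way independent instances could act as a check and balance is to compare each model’s prediction with those of nearby instances on the same corridor and flag large disagreements for review. The sketch assumes that approach; the median-based rule, the `neighbours` mapping and the 30% tolerance are illustrative choices, not details from this post.

```python
from statistics import median
from typing import Dict, List


def flag_inconsistent(
    predictions: Dict[str, float],
    neighbours: Dict[str, List[str]],
    tolerance: float = 0.3,  # hypothetical: flag if >30% away from the neighbours' median
) -> List[str]:
    """Flag model instances whose prediction departs sharply from their neighbours'."""
    flagged: List[str] = []
    for node, value in predictions.items():
        peers = [predictions[n] for n in neighbours.get(node, []) if n in predictions]
        if not peers:
            continue  # nothing to compare against
        reference = median(peers)
        # Relative deviation from the neighbours' median; skip a zero reference.
        if reference != 0 and abs(value - reference) / abs(reference) > tolerance:
            flagged.append(node)
    return flagged
```

Using the median rather than the mean keeps a single misbehaving instance from dragging its neighbours’ reference value and causing them to be flagged too.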

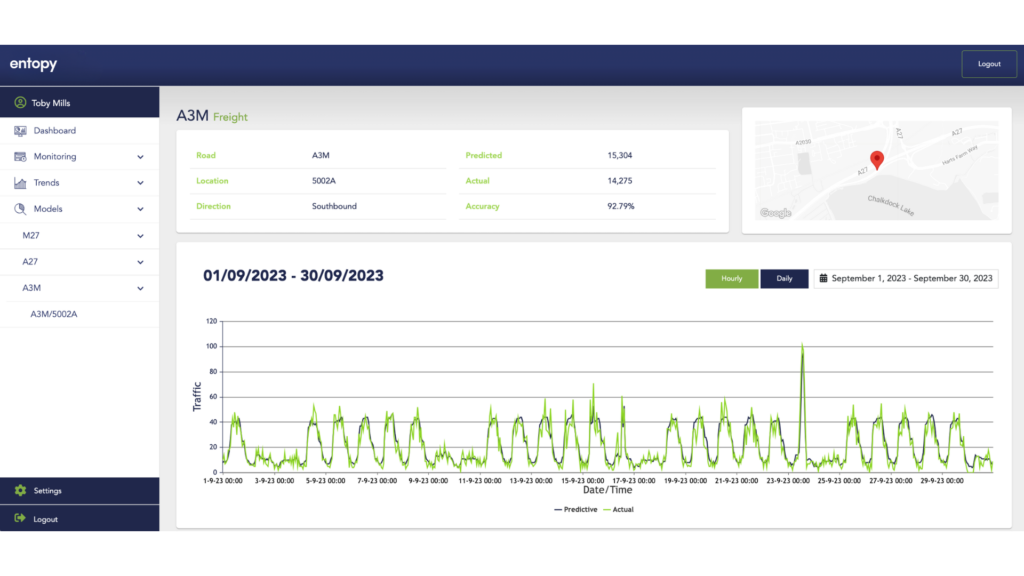

We have now deployed many models across the UK with an average accuracy of >85%. The screenshot above, from a recently deployed model, shows the model capturing a significant, outlying spike in traffic flow. The blue line is the prediction; the green line is the observed traffic flow.
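The post does not say how accuracy is calculated. A common convention for flow forecasts is 1 minus the mean absolute percentage error (MAPE), and the sketch below assumes that definition, averaged across deployed models, purely to illustrate what a figure like ">85%" could mean.

```python
from typing import Sequence


def accuracy(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Accuracy as 1 - MAPE, floored at zero (an assumed definition)."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need equal-length, non-empty series")
    # Skip intervals with zero observed flow, where percentage error is undefined.
    errors = [abs(p - a) / abs(a) for p, a in zip(predicted, actual) if a != 0]
    if not errors:
        raise ValueError("all observed values are zero")
    mape = sum(errors) / len(errors)
    return max(0.0, 1.0 - mape)


def network_average(per_model_accuracy: Sequence[float]) -> float:
    """Simple mean of per-model accuracies across deployed micromodels."""
    if not per_model_accuracy:
        raise ValueError("no models to average")
    return sum(per_model_accuracy) / len(per_model_accuracy)
```

Other definitions (for example, the share of intervals within a fixed tolerance) would give different numbers for the same predictions, so the metric should always be stated alongside the figure.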

Network performance.

Each model feeds into our core software, which uses concepts such as RDF (Resource Description Framework) and ontologies to orchestrate the model outputs together with other data inputs. Ultimately, the network informs an overarching algorithm that predicts traffic to the port.
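RDF describes data as subject, predicate, object triples, which makes it natural to record which road segments feed into which, and which micromodel covers each segment. The toy in-memory store below illustrates the idea; the `ont:`, `road:` and `model:` prefixes and the `feedsInto`/`predictsFor` predicates are hypothetical, and in practice a library such as rdflib with SPARQL queries would typically be used instead.

```python
from typing import Iterator, Optional, Set, Tuple

Triple = Tuple[str, str, str]


class TripleStore:
    """A toy in-memory store of (subject, predicate, object) triples."""

    def __init__(self) -> None:
        self.triples: Set[Triple] = set()

    def add(self, s: str, p: str, o: str) -> None:
        self.triples.add((s, p, o))

    def match(
        self, s: Optional[str] = None, p: Optional[str] = None, o: Optional[str] = None
    ) -> Iterator[Triple]:
        # None acts as a wildcard, similar to a variable in a SPARQL pattern.
        for t in self.triples:
            if (s is None or t[0] == s) and (p is None or t[1] == p) and (o is None or t[2] == o):
                yield t


def upstream_segments(store: TripleStore, target: str) -> Set[str]:
    """All road segments whose traffic (transitively) feeds into `target`."""
    found: Set[str] = set()
    stack = [target]
    while stack:
        current = stack.pop()
        for s, _, _ in store.match(p="ont:feedsInto", o=current):
            if s not in found:
                found.add(s)
                stack.append(s)
    return found


def models_for(store: TripleStore, segments: Set[str]) -> Set[str]:
    """Micromodels whose predictions cover any of the given segments."""
    return {s for s, _, o in store.match(p="ont:predictsFor") if o in segments}
```

Walking the graph this way lets the orchestration layer find every micromodel relevant to a destination without hard-coding the road network into the overarching algorithm.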

Measured against the port’s commercial data, the network has achieved an accuracy of >90% since initial deployment.

The network of micromodels has effectively captured real-time event-based data such as traffic accidents and road maintenance, updating the overall prediction accordingly.

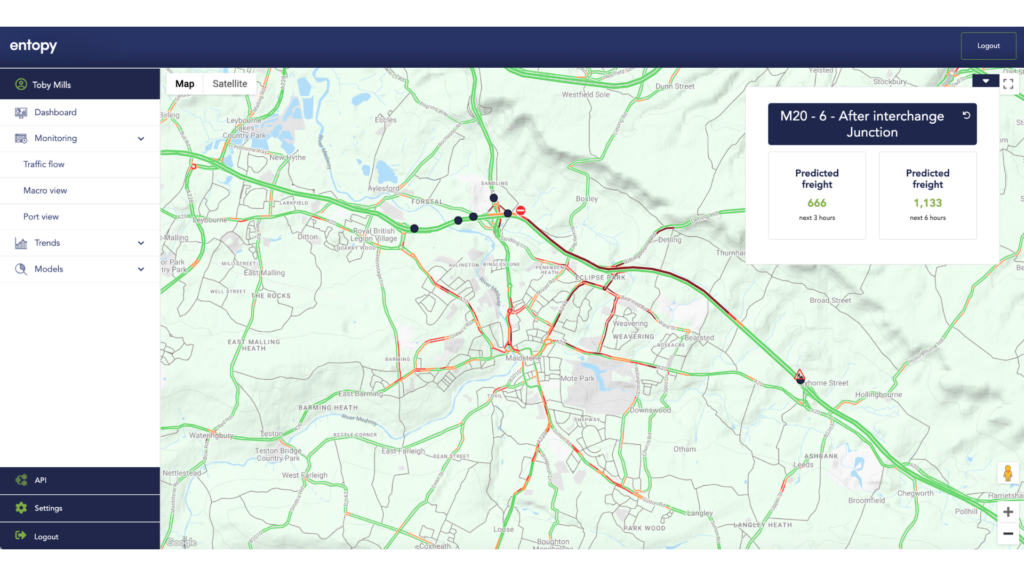

The image above shows some micromodels deployed within our macro view interface. Each of the blue dots is an independent AI model, predicting traffic at that specific part of the road. These form nodes on a network, with real-time events such as congestion, traffic accidents and road maintenance being captured, classified and introduced into the network based on their location.
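A minimal sketch of the capture, classify and locate steps, assuming events arrive as a text description plus a latitude/longitude. The keyword rules, the `max_km` cut-off and the node IDs are hypothetical; a production system would more likely use a trained classifier and snap events to the road geometry rather than to the nearest model node.

```python
import math
from typing import Dict, Optional, Tuple

EARTH_RADIUS_KM = 6371.0

# Hypothetical keyword rules, checked in order; first match wins.
CATEGORY_KEYWORDS = {
    "accident": ("collision", "crash", "accident"),
    "roadworks": ("roadworks", "maintenance", "lane closure"),
    "congestion": ("queue", "congestion", "slow traffic"),
}


def classify(description: str) -> str:
    """Map a free-text event description to a coarse category."""
    text = description.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(k in text for k in keywords):
            return category
    return "other"


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def assign_event(
    location: Tuple[float, float],
    nodes: Dict[str, Tuple[float, float]],
    max_km: float = 2.0,
) -> Optional[str]:
    """Attach an event to the nearest model node, or None if none is within max_km."""
    best_id, best_km = None, math.inf
    for node_id, node_location in nodes.items():
        d = haversine_km(location, node_location)
        if d < best_km:
            best_id, best_km = node_id, d
    return best_id if best_km <= max_km else None
```

The distance cut-off keeps events far from any deployed model from being attached to an unrelated node.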

Addressing the obvious downside.

In effect, what we are describing is akin to a multi-layer perceptron (MLP), but built node by node or layer by layer, with each node being an independent AI model.

This approach has advantages, but the obvious challenge is the time it takes to deploy. This is where Entopy’s micromodel technology development has focused.

We have developed scripts and tools that automate the entire model deployment process, which means we can deploy hundreds of model instances in a very short period. This has been extensively tested and will be applied to other use cases.
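A minimal sketch of template-driven deployment: one shared micromodel template is instantiated for every segment, each instance is trained and validated by a caller-supplied function, and only instances that pass a quality gate are registered. The `DeploymentSpec` fields, the template name and the 0.85 threshold are hypothetical, not details of Entopy’s tooling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class DeploymentSpec:
    template: str    # hypothetical micromodel template name, e.g. "traffic-flow-v1"
    segment_id: str
    lat: float
    lon: float


def deploy_all(
    template: str,
    segments: Sequence[Tuple[str, float, float]],
    train: Callable[[DeploymentSpec], Tuple[object, float]],
    min_accuracy: float = 0.85,  # assumed quality gate
) -> Tuple[Dict[str, object], List[str]]:
    """Train and register one micromodel instance per segment from a shared template."""
    deployed: Dict[str, object] = {}
    needs_review: List[str] = []
    for segment_id, lat, lon in segments:
        spec = DeploymentSpec(template, segment_id, lat, lon)
        # The caller supplies training and validation; it returns (model, score).
        model, score = train(spec)
        if score >= min_accuracy:
            deployed[segment_id] = model      # passes the quality gate
        else:
            needs_review.append(segment_id)   # held back for manual inspection
    return deployed, needs_review
```

Because every instance comes from the same template and the same gate, deploying three hundred segments is the same code path as deploying three, and only the failures need human attention.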

What’s next?

Our ambition for the micromodel technology is to deliver effective operational and predictive intelligence to any operational environment. We are growing our model library: as we deliver new and extended use cases, we will naturally build a powerful library of micromodels that can quickly ‘click in’ to new use cases.

We also have a few items on our roadmap that will accelerate this. Our recently announced partnership with the University of Essex and its Institute for Analytics & Data Science (IADS) is the start of a long-term collaboration to develop model generalisation and model federation.

These technology milestones will significantly accelerate our timeline for realising our overall mission, ensuring we can deliver effective operational and predictive intelligence to many more use cases.
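The roadmap does not specify which federation technique will be used. One widely used form is federated averaging (FedAvg), in which each stakeholder trains a copy of a model on its own data and shares only the resulting parameters, which are then averaged weighted by local dataset size. The sketch below assumes that technique purely for illustration, with models represented as flat lists of parameters.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """FedAvg-style aggregation: average each parameter, weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one weight vector per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("client sizes must sum to a positive number")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all weight vectors must have the same length")
    # Raw data never leaves each stakeholder; only parameters are combined here.
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]
```

For two stakeholders with parameters `[1.0, 2.0]` (100 samples) and `[3.0, 4.0]` (300 samples), the aggregate is `[2.5, 3.5]`, pulled towards the stakeholder with more data.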

In October, Entopy’s CEO, Toby Mills, was invited to participate in an industry roundtable with the Minister for Transport and Roads, Richard Holden. The event was hosted by the Connected Places Catapult and was timely, following the culmination of the first Freight Innovation Fund Accelerator and the start of Freight week.

Around 30 industry leaders and innovators from the freight sector attended, including senior officials from the Department for Transport (DfT). The conversation centred on key themes, including the role of innovation in supporting commercial growth in the UK freight sector, how the public and private sectors can collaborate to deliver the objectives of the Future of Freight Plan, and opportunities for innovators to showcase their products and companies.

The event was a fantastic opportunity to showcase Entopy and what it had achieved in the 6-month Freight Innovation Fund accelerator programme. Toby Mills said: “It was a great privilege to have been invited to attend the roundtable event with the minister. The discussion was very positive, with lots of really interesting contributions.

It was also a great opportunity to spend time with the minister, showcasing Entopy and what we have been able to achieve in a very short period within the Freight Innovation Fund Accelerator programme.”

Editor note: Richard Holden was promoted to Minister Without Portfolio and Conservative Party Chairman on the 13th November 2023.

Entopy and the University of Essex have recently secured Innovate UK funding to proceed with a Knowledge Transfer Partnership worth over £260k. The partnership will extend Entopy’s existing capabilities in data analytics and applied Artificial Intelligence. Securing the KTP is the first step in a long-term partnership between Entopy and the university’s leading Institute for Analytics and Data Science (IADS).

Entopy is an innovative software business based in Cambridge, UK focusing on delivering highly effective operational and predictive intelligence to businesses, using a combination of digital twin and Artificial Intelligence. Its foundational software uncovers hidden answers across large, complex, and disparate datasets to deliver historical, real-time, and predictive insights.

Building on its highly innovative software platform, Entopy has developed a novel approach to Artificial Intelligence called micromodels. The technology breaks large, complex predictive problems into smaller, more specific ‘chunks’, deploying targeted AI models to specific parts of the problem. Using its foundational software, outputs from respective models are networked together with real-time data to deliver dynamic networks of micromodels capable of providing effective predictive intelligence in complex operational settings.

The technology also addresses major challenges in the effective application of AI in complex, real-world environments: it maintains data segmentation across multiple stakeholders, supports explainable predictive outputs and enables iterative deployment of complex predictive models.

The partnership will progress Entopy’s micromodels roadmap, focusing on the generalisation and federation of micromodels to increase the efficacy of Entopy’s software and reduce deployment time across a broad range of use cases. Positive feedback from Innovate UK stated: “Building on its novel software platform, Entopy is progressing the state of the art in this field.”

KTPs offer a unique opportunity to access funding for innovation and growth, and through collaborating with the University of Essex, Entopy will be working with leading academics Professor Haris Mouratidis and Dr Mays Al-Naday from the School of Computer Science and Electronic Engineering (CSEE), who will share their expertise throughout the project.

Professor Haris Mouratidis, Director of the Institute for Analytics and Data Science commented: “We are very excited to work with Entopy on this KTP. The project will enable us to combine our world-leading research on micromodel technology and federated learning with Entopy’s leading intelligence platform to provide innovative solutions to a fast-developing, but also highly complex, sector in which Entopy is operating. The project is part of a larger scale collaboration with Entopy around data science, artificial intelligence and cybersecurity aiming to revolutionise predictive intelligence in complex operational environments.”

Dr Mays Al-Naday added: “This is an exciting opportunity to join forces with Entopy in delivering breakthrough innovation of AI services, expanding the digital twinning capabilities offered to Entopy’s clients. It is also a starting point of what we foresee as a long and prosperous partnership on research and innovation in AI, cloud services and cybersecurity; supporting general digital transformation in the UK.”

The KTP will support funds recently awarded to Entopy through the prestigious Freight Innovation Fund Accelerator (FIF). This programme, funded by the Department for Transport and delivered by Connected Places Catapult, is designed to support and finance innovators to trial their solutions within real-world environments and prepare their businesses to go to market. FIF identified a major challenge in delivering predictive insights to the sector, and with the funding awarded, Entopy has successfully deployed its novel micromodel technology, accurately predicting freight traffic flows to a major UK port.

Toby Mills, CEO of Entopy said: “We are excited to formalise our partnership with the University of Essex. From day one, it was clear that this relationship would flourish, with alignment of research focus and a healthy dynamic between the teams.

The next milestones for our micromodel technology focus on broadening use case applicability, building a library of generalised models and developing novel federation techniques, all of which are critical to extending the capability to other sectors and use cases.”

Robert Walker, Head of Business Engagement at the University of Essex commented: “We are thrilled to embark on this partnership with Entopy, funded via Innovate UK, and we are hopeful it is the first of many with this exciting business. Entopy, an innovative force in the field of data analytics and applied Artificial Intelligence, typify the knowledge-hungry business we strive to work alongside. This partnership is a testament to our commitment to fostering collaboration with industry leaders to advance cutting-edge technology. I am excited to see how the project progresses.”